Can the SARSA algorithm improve our trading strategy?

Leveraging a fundamental Reinforcement Learning algorithm for investing

In this post I try out a Reinforcement Learning algorithm called State–action–reward–state–action (SARSA), which aims to learn an optimal policy for an agent interacting with an environment. In our case the rewards will be stock returns, but rewards can take many forms, such as points in a video game. If you want a more detailed explanation of why Reinforcement Learning can be a useful tool in trading, I recommend reading my previous post, where I use Q-learning to improve the performance of a range of machine learning models.

SARSA is similar to Q-learning but differs in one key way. SARSA is an on-policy algorithm, meaning it learns the Q-values of the policy it is actually following, exploratory moves included. Q-learning is an off-policy algorithm: it learns the Q-values of the optimal (greedy) policy regardless of the policy used to collect the experience. Here is an analogy: Q-learning would try to learn how to cook by watching Gordon Ramsay, while SARSA would try out some recipes on its own and see what the results are.

This means that, as long as the agent keeps exploring, the policy derived from SARSA's Q-values might be different from the policy found by Q-learning.
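To make the on-policy versus off-policy distinction concrete, here is a minimal sketch of the two update targets. The Q-table, the state names, the hyperparameters and the numbers are all hypothetical and chosen only for illustration; they are not taken from the trading setup in this post.

```python
# Hypothetical two-action problem: 0 = stay in cash, 1 = hold the stock.
ALPHA = 0.1   # learning rate (assumed value)
GAMMA = 0.9   # discount factor (assumed value)

def sarsa_target(q, reward, next_state, next_action):
    """On-policy target: uses the action the agent actually takes next."""
    return reward + GAMMA * q[next_state][next_action]

def q_learning_target(q, reward, next_state):
    """Off-policy target: uses the best next action, whatever the agent does."""
    return reward + GAMMA * max(q[next_state])

def td_update(q, state, action, target):
    """Move Q(state, action) a fraction ALPHA towards the target."""
    q[state][action] += ALPHA * (target - q[state][action])

# Made-up Q-table, indexed as q[state][action]
q = {"s0": [0.0, 0.0], "s1": [0.2, 1.0]}

# Suppose the agent explores and picks the worse action (0) in state s1.
reward, next_state, next_action = 0.5, "s1", 0

sarsa = sarsa_target(q, reward, next_state, next_action)
qlearn = q_learning_target(q, reward, next_state)
print(f"SARSA target:      {sarsa:.2f}")
print(f"Q-learning target: {qlearn:.2f}")

# Apply the SARSA update to Q(s0, 1)
td_update(q, "s0", 1, sarsa)
```

Given the same experience, SARSA's target is lower here because it accounts for the exploratory action the agent really took, while Q-learning assumes the best action will follow. That gap is why the two algorithms can end up with different Q-values.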

Harnessing Reinforcement Learning to Enhance our Trading

This post will focus on the application of Reinforcement Learning to create an intelligent trading strategy. In my previous posts, I used various machine learning models to predict the next day’s returns for a particular stock. In all those cases, I set it up as a classification problem: days where the stock’s performance was positive would return a 1, …

As usual, I provide the full code and encourage you to try it out and experiment with it. Let’s start things off with the code below: we pull the data for Mastercard (ticker: MA) and set up some features that will be used by the models to make predictions. We also scale the data and install all the necessary libraries.

Just like in the Q-learning example, we will train the models on the training dataset and then have them make predictions on the validation dataset. We will then train the SARSA algorithm on these predictions. Finally, we will compare the performance of the individual models on the test data with the performance of SARSA.

import yfinance as yf

df = yf.download('MA').reset_index()

import pandas as pd

import numpy as np

seed=42

import os

os.environ['PYTHONHASHSEED'] = str(seed)

np.random.seed(seed)

import random

random.seed(seed)

#Tweaking the fonts, etc.

import matplotlib.pyplot as plt

from matplotlib import rcParams

rcParams['figure.figsize'] = (18, 8)

rcParams['axes.spines.top'] = False

rcParams['axes.spines.right'] = False

# Feature deriving

# Distance from the moving averages

for m in [10, 20, 30, 50, 100]:
    df[f'feat_dist_from_ma_{m}'] = df['Close']/df['Close'].rolling(m).mean()-1

# Distance from n day max/min

for m in [3, 5, 10, 15, 20, 30, 50, 100]:
    df[f'feat_dist_from_max_{m}'] = df['Close']/df['High'].rolling(m).max()-1
    df[f'feat_dist_from_min_{m}'] = df['Close']/df['Low'].rolling(m).min()-1

# Price distance

for m in [1, 2, 3, 4, 5, 10, 15, 20, 30, 50, 100]:
    df[f'feat_price_dist_{m}'] = df['Close']/df['Close'].shift(m)-1

# Relative Strength Index (RSI) - 14 days

def calculate_rsi(series, window=14):
    """Return the simple-moving-average RSI of a price series."""
    diff = series.diff(1)
    # Keep the original index so the result aligns with the DataFrame
    gain = pd.Series(np.where(diff > 0, diff, 0), index=series.index)
    loss = pd.Series(np.where(diff < 0, -diff, 0), index=series.index)
    avg_gain = gain.rolling(window=window, min_periods=window).mean()
    avg_loss = loss.rolling(window=window, min_periods=window).mean()
    rs = avg_gain / avg_loss
    return 100 - (100 / (1 + rs))

# Call the function with the 'Close' column
df['feat_rsi_14'] = calculate_rsi(df['Close'])

# Price change over the last 5 and 10 days

df['feat_price_change_5'] = df['Close'].pct_change(periods=5)

df['feat_price_change_10'] = df['Close'].pct_change(periods=10)

# 1 day performance

df['pct_change_future'] = df['Close'].pct_change().shift(-1)

# Calculate cumulative growth of $100 investment

df['Change_100_Investment'] = (1 + df['pct_change_future']).cumprod() * 100

# Adding a new column 'target' based on pct_change_future

df['target'] = np.where(df['pct_change_future'] > 0, 1, 0)

# Same-day return (used as the HMM input)
df['returns'] = df['Close'].pct_change()

df = df.dropna()

# Define the date ranges for training, validation, and testing

validation_start_date = '2015-01-01'

validation_end_date = '2019-01-01'

# Split the DataFrame into training, validation, and testing sets

df_train = df[df['Date'] < validation_start_date].reset_index(drop=True)

df_val = df[(df['Date'] >= validation_start_date) & (df['Date'] < validation_end_date)].reset_index(drop=True)

df_test = df[df['Date'] >= validation_end_date].reset_index(drop=True)

feat_cols = [col for col in df.columns if 'feat' in col]

df_train = df_train.dropna()

df_val = df_val.dropna()

df_test = df_test.dropna()

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train = df_train[feat_cols]

x_train_scaled = scaler.fit_transform(x_train)

x_train_scaled_df = pd.DataFrame(x_train_scaled, columns=feat_cols)

x_test = df_test[feat_cols]

x_test_scaled = scaler.transform(x_test)

x_test_scaled_df = pd.DataFrame(x_test_scaled, columns=feat_cols)

x_val = df_val[feat_cols]

x_val_scaled = scaler.transform(x_val)

x_val_scaled_df = pd.DataFrame(x_val_scaled, columns=feat_cols)

x_train = x_train_scaled_df

x_test = x_test_scaled_df

x_val = x_val_scaled_df

y_train = df_train['target']

y_test = df_test['target']

y_val = df_val['target']

!pip install hmmlearn

!pip install dask[dataframe]

!pip install catboost

Next, we will create various models that try to predict whether the next day's price will be higher than today's. We will use the same models as in the Q-learning post, plus the Hidden Markov Model from my latest post. Below are the links to my previous posts, which go into more detail on the models used and how they perform on their own.

The Golden Age of Forecasting: Machine Learning and Gold Price Prediction

Exploring the performance of different Machine Learning strategies on Visa stock

# Import necessary libraries

import xgboost as xgb

from sklearn.metrics import accuracy_score

from xgboost import XGBClassifier

from sklearn.ensemble import RandomForestClassifier

from catboost import CatBoostClassifier

import lightgbm as lgb

from lightgbm import LGBMClassifier

params = {

'objective': 'binary:logistic',

'eval_metric': 'logloss',

'random_state': 42,

'learning_rate': 0.05,

'nthread': -1,

'max_depth': 3,

}

xgb_model = xgb.XGBClassifier(**params)

xgb_model.fit(x_train, y_train)

# Predictions on test set

y_pred_xgb = xgb_model.predict(x_test)

rf_model = RandomForestClassifier(

n_estimators=100,

max_depth=5,

random_state=42,

class_weight='balanced',

n_jobs=-1,

)

rf_model.fit(x_train, y_train)

# Predictions on test set

y_pred_rf = rf_model.predict(x_test)

catboost_model = CatBoostClassifier(

iterations=100,

learning_rate=0.001,

depth=3,

verbose=0 # Suppresses the output

)

catboost_model.fit(x_train, y_train)

# Predictions on test set

y_pred_catboost = catboost_model.predict(x_test)

lgb_model = lgb.LGBMClassifier(

n_estimators=200,

learning_rate=0.001,

max_depth=5

)

lgb_model.fit(x_train, y_train)

# Predictions on test set

y_pred_lgb = lgb_model.predict(x_test)

from hmmlearn import hmm

HMMmodel = hmm.GaussianHMM(n_components=2, covariance_type="diag")

X_train = df_train['returns'].to_numpy().reshape(-1, 1)

HMMmodel.fit(X_train)

Z_train = HMMmodel.predict(X_train)

# transition matrix

HMMmodel.transmat_

# try to set the transition matrix intuitively

HMMmodel.transmat_ = np.array([

[0.999, 0.001],

[0.001, 0.999],

])

X_test = df_test['returns'].to_numpy().reshape(-1, 1)

Z_test = HMMmodel.predict(X_test)

df_test['HMMstate'] = Z_test

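# Assumes state 0 is the bullish regime. HMM state labels are arbitrary,
# so check HMMmodel.means_ after fitting to confirm which state has the higher mean return.
df_test['HMMsignal'] = np.where(df_test['HMMstate'] == 0, 1, 0)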

y_test_xgb = (y_pred_xgb > 0.5).astype(int)

df_test['xgb_pred'] = y_test_xgb

y_test_rf = (y_pred_rf > 0.5).astype(int)

df_test['rf_pred'] = y_test_rf

y_test_cat = (y_pred_catboost > 0.5).astype(int)

df_test['cat_pred'] = y_test_cat

y_test_light = (y_pred_lgb > 0.5).astype(int)

df_test['light_pred'] = y_test_light

df_test['equity_xgb'] = np.cumprod(1+df_test['xgb_pred']*df_test['pct_change_future'])

df_test['equity_rf'] = np.cumprod(1+df_test['rf_pred']*df_test['pct_change_future'])

df_test['equity_cat'] = np.cumprod(1+df_test['cat_pred']*df_test['pct_change_future'])

df_test['equity_light'] = np.cumprod(1+df_test['light_pred']*df_test['pct_change_future'])

df_test['equity_buy_and_hold'] = np.cumprod(1+df_test['pct_change_future'])

df_test['equity_HMM'] = np.cumprod(1+df_test['HMMsignal']*df_test['pct_change_future'])

from plotly import graph_objects as go

fig = go.Figure()

# go.Line is deprecated in plotly; go.Scatter with mode='lines' draws the same line chart
fig.add_trace(
    go.Scatter(x=df_test['Date'], y=df_test['equity_buy_and_hold'], mode='lines', name='Buy and Hold')
)

fig.add_trace(
    go.Scatter(x=df_test['Date'], y=df_test['equity_xgb'], mode='lines', name='XGB')
)

fig.add_trace(
    go.Scatter(x=df_test['Date'], y=df_test['equity_HMM'], mode='lines', name='HMM')
)

fig.add_trace(
    go.Scatter(x=df_test['Date'], y=df_test['equity_rf'], mode='lines', name='Random Forest')
)

fig.add_trace(
    go.Scatter(x=df_test['Date'], y=df_test['equity_cat'], mode='lines', name='CAT')
)

fig.add_trace(
    go.Scatter(x=df_test['Date'], y=df_test['equity_light'], mode='lines', name='Light')
)

fig.update_layout(

title_text='Models Backtest',

legend={'x': 0, 'y':-0.05, 'orientation': 'h'},

xaxis={'title': 'Date'},

yaxis={'title': 'Multiple from Initial Investment'}

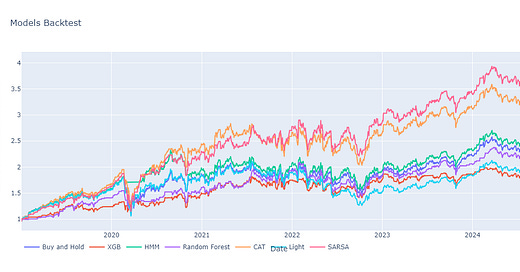

)On the performance chart above we can see that the CAT model and HMM model end up beating the buy-and-hold benchmark on the test dataset. Now let’s have these models make predictions on the validation dataset and then train our SARSA algorithm using these predictions.
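The equity curves on the chart come from compounding the next-day return only on days when a model's signal is 1, and staying flat otherwise. As a small worked sketch of that calculation, using made-up signals and returns rather than actual Mastercard data (the helper name `equity_curve` is hypothetical):

```python
from itertools import accumulate
from operator import mul

def equity_curve(signals, next_day_returns):
    """Compound returns on days the signal is 1; stay flat (x1.0) otherwise."""
    growth = [1 + s * r for s, r in zip(signals, next_day_returns)]
    return list(accumulate(growth, mul))

# Made-up numbers for illustration only
signals = [1, 0, 1, 1]
next_day_returns = [0.10, -0.50, -0.10, 0.00]

strategy = equity_curve(signals, next_day_returns)
buy_and_hold = equity_curve([1] * len(next_day_returns), next_day_returns)

print([round(x, 4) for x in strategy])
print([round(x, 4) for x in buy_and_hold])
```

This mirrors the `np.cumprod(1 + signal * pct_change_future)` lines in the backtest code. In the toy data the strategy sidesteps the one large loss, which is the only reason it ends ahead of buy-and-hold. Like the backtest, the sketch ignores transaction costs.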

X_valHMM = df_val['returns'].to_numpy().reshape(-1, 1)

Z_val = HMMmodel.predict(X_valHMM)

df_val['state'] = Z_val

df_val['HMMsignal'] = np.where(df_val['state'] == 0, 1, 0)

y_val_pred_lgb = lgb_model.predict(x_val)

y_val_pred_catboost = catboost_model.predict(x_val)

y_val_pred_rf = rf_model.predict(x_val)

y_val_pred_xgb = xgb_model.predict(x_val)

y_val_xgb = (y_val_pred_xgb > 0.5).astype(int)

df_val['xgb_pred'] = y_val_xgb

y_val_rf = (y_val_pred_rf > 0.5).astype(int)

df_val['rf_pred'] = y_val_rf

y_val_cat = (y_val_pred_catboost > 0.5).astype(int)

df_val['cat_pred'] = y_val_cat

y_val_light = (y_val_pred_lgb > 0.5).astype(int)

df_val['light_pred'] = y_val_light

Now that we have the predictions from the models, let’s initialize the SARSA parameters and create the algorithm.
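As a rough idea of what such an algorithm can look like, here is a minimal tabular SARSA sketch. It is an assumption about one possible setup, not necessarily the implementation used in this post: the state is the tuple of model signals for the day, the two actions are staying in cash (0) or holding the stock (1), the reward is the next-day return when invested, and the hyperparameters, function names and toy data are all made up.

```python
import random
from collections import defaultdict

ALPHA, GAMMA, EPSILON = 0.1, 0.9, 0.1  # assumed hyperparameters
ACTIONS = (0, 1)                        # 0 = cash, 1 = hold the stock

def choose_action(q, state, rng):
    """Epsilon-greedy choice over the current Q-values."""
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[state][a])

def train_sarsa(states, rewards_if_long, episodes=50, seed=42):
    """Tabular SARSA; each episode is one chronological pass over the data."""
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0, 0.0])
    for _ in range(episodes):
        state = states[0]
        action = choose_action(q, state, rng)
        for t in range(len(states) - 1):
            reward = rewards_if_long[t] * action  # cash earns nothing
            next_state = states[t + 1]
            next_action = choose_action(q, next_state, rng)
            # On-policy update: bootstrap from the action actually chosen next
            target = reward + GAMMA * q[next_state][next_action]
            q[state][action] += ALPHA * (target - q[state][action])
            state, action = next_state, next_action
    return q

# Toy data: each state is a tuple of model signals, e.g. (xgb, rf, hmm)
toy_states = [(1, 1, 1), (0, 0, 0)] * 50
toy_rewards = [0.01, -0.01] * 50  # next-day return if invested

q_table = train_sarsa(toy_states, toy_rewards)
policy = {s: max(ACTIONS, key=lambda a: q_table[s][a]) for s in q_table}
print(policy)
```

On real data, one plausible mapping (again an assumption) would build the states from the validation-set columns `xgb_pred`, `rf_pred`, `cat_pred`, `light_pred` and `HMMsignal`, and take the rewards from `pct_change_future`. The learned policy would then be read off the Q-table and applied to the test set.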
